Your voice is no longer yours—unless you act fast.

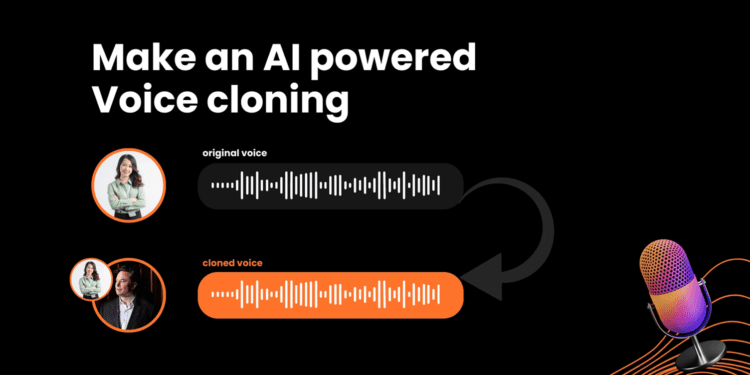

The rapid advancement of AI-driven voice cloning has introduced a disturbing new reality: from as little as three seconds of audio, an AI model can now replicate your voice with chilling accuracy. That capability has serious implications for scams, elections, and identity theft. Voice deepfakes pose a significant threat to personal security, and combating them requires both vigilance and better tools.

AI voice cloning tools have made it easier than ever for criminals to impersonate individuals, whether for financial fraud, political manipulation, or other social engineering attacks. Imagine receiving a phone call from someone who sounds exactly like your boss or a family member, only to learn later that it was an attempt to steal sensitive information or money. The risks are real, and the potential for abuse is vast.

So how can you protect yourself? Tools for detecting voice deepfakes are emerging from AI researchers and cybersecurity firms. These systems analyze audio for speech irregularities and inconsistencies in tone or cadence that may indicate a synthetic voice. Beyond detection, security measures such as multi-factor authentication (MFA) add layers of protection, so that a convincing voice alone is not enough to reach your accounts. Voiceprint technology can help as well, but it works best combined with other factors, since a good clone is built to fool exactly that kind of check.

As the fight against deepfakes intensifies, it’s clear that voice cloning is no longer a futuristic problem—it’s here today. Your voice, once a unique identifier, is now a potential vulnerability. But with the right precautions and technological safeguards, you can fight back and retain control over your identity.